Mathematics, Statistics, and Computer Science Department

Supporting Students, Embracing Challenges

Our mission is to support and nurture our students in their own journeys so that they can become lifelong learners. Our classrooms focus on:

- Developing problem solvers and communicators of mathematical ideas who can demonstrate their enduring understandings.

- Fostering critical thinking so that our students can make connections and extend their learning beyond the classroom.

- Giving our students agency by encouraging a growth mindset, embracing challenges and risk-taking, growing from mistakes, and focusing on the process of learning.

- Celebrating diversity of thought and experience.

- Actively seeking to uplift the voices of our underrepresented students and finding ways to highlight diversity within the mathematical community.

- Sharing our passion for the subjects to inspire an appreciation for the beauty, precision, relevance and systematic efficiencies of our disciplines.

Mathematics

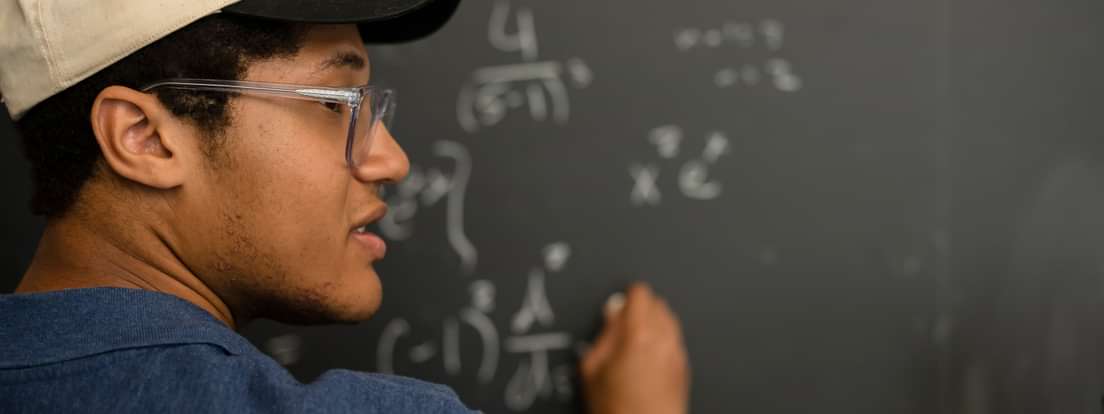

Wherever a student is in their mathematical learning journey, we seek to meet them there. Our extensive curriculum offers many entry points, so each student can begin at the level that meets their needs. Our faculty is eager to work with each student and to challenge them to grow beyond what they thought possible. Together, our students learn to reason through problems both quantitatively and graphically. They learn to use multiple representations to explain and describe mathematical relationships, and to apply models to interpret physical, social and mathematical phenomena. In addition, they extend their prior knowledge to make connections to new concepts. We encourage our students to grapple with ideas and to appreciate that making mistakes is an important part of learning. Finally, we push our students to lean into their classroom community and learn both with and from each other, knowing that diverse perspectives are important.

Sample courses:

- Integrated Algebra and Geometry (Problem Solving and Proof)

- Precalculus

- AP Calculus (AB/BC)

- Multivariable Calculus

- Linear Algebra

- Honors Mathematics Seminar

MSC Study Center

Open Monday through Thursday during evening study hours, with tutors and a faculty member on hand to answer questions and support student learning.

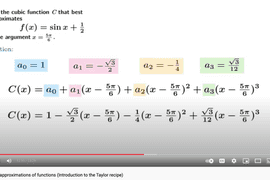

A Calculus Playlist

Chris Odden’s library of videos supports a flipped classroom, giving students detailed and often humorous explanations of concepts.

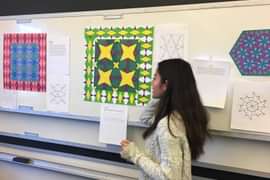

Math in Action

Students extend their learning to measure tall objects, build apps, and create beautiful mathematics patterns.

Statistics

Data is all around us, and learning to interpret it, analyze it, and make predictions from it helps us understand the world in new ways. Our students not only learn the statistical skills to work with data, but they also personalize their learning by investigating topics relevant to their own lives. They learn how powerful and important the study of statistics is in today’s world. Our teachers encourage students to use R to solidify their statistical understanding and explore data visualizations.

Our department offers students opportunities to study statistics in both term-contained and year-long courses. Both our AP Statistics and Project-Based Statistics courses prepare students for the AP exam.

Sample courses:

- AP Statistics

- Project-Based Statistics

- Statistics for Social Justice

Project-Based Statistics

This service-learning-based statistics course enables students to “bring numbers to life” in collaboration with the PA community.

Opportunity Insights

Faculty were the first high school teachers in the country to pilot modules from this Big Data course with the Harvard teaching team.

American Statistical Association Awards

Students can compete in the ASA’s poster project contest, and many of our students have won it over the years.

Computer Science

Our computer science curriculum is robust and evolves to reflect current trends in the field. We offer courses that introduce new students to computer science as well as courses that nurture the curiosity and interests of our most seasoned programmers. We welcome all students to explore computational thinking, and each course is matched to students' experience and readiness for challenge. There are interesting, meaningful course topics at every level of the curriculum.

Sample courses:

- Machine Learning

- Data Visualization

- Data Structures and Algorithms in C

- AP Computer Science

- Web App Development

Our Faculty

Learning happens across all aspects of our campus. Andover's faculty are subject matter experts, mentors, stewards of Knowledge & Goodness, and much more. Joel Jacob is the Department Chair; Heidi Wall is the Assistant Chair.

Nikki Cleare

Khiem DoBa

Mr. Amanfu mostly teaches precalculus and calculus. Outside the classroom, he coaches intramural and recreational soccer, where he gets to see other sides of our students' amazing personalities. He lives in Stearns House with his family, where he is the house counselor to 9th- and 10th-grade boys.

Chase Chamberlin

Teaching Fellow in Mathematics, Computer Science & Statistics, Rockwell House Counselor, JV Football Coach, Lacrosse Coach

“My goal for mathematics is not only to see the world differently but have mathematics shape the world we live in.”

Meghan Clarke

Instructor of Computer Science & Math, House Counselor, Advisor, Tang Fellow

Mrs. Clarke loves sharing her passion for Computer Science and Math with students. She is also excited about her new role as resident house counselor in Bertha Bailey, where she lives with her husband and two daughters.

Courses

CSC413 | Digital Media Computing

This course provides an introduction to computational thinking through the creation and manipulation of digital sounds and images. Students will learn how media files are stored on a computer system and use this knowledge to create projects centered around digital media. Topics could include compression, generative art and music, filtering, and data encoding.

Noureddine El Alam

Math Instructor, Girls Varsity Soccer Coach, EBI Instructor, House Counselor and Advisor, Muslim Student Association Advisor

Mr. El Alam has a passion for teaching, coaching, and mentoring young people. In the classroom, in the dorm, and on the soccer field, he looks for teachable moments to impart life lessons on decision making, problem solving, and working for the common good.

IN THE CLASSROOM:

Financial Literacy Seminar | MTH 440

Students in this course will use their skills, passion, and creativity in ways that can make an impact on the world. The instructor will present and explore both theoretical and practical models that promote fiscally responsible behavior. Students will read and discuss several short books, then research and design collaborative projects that demonstrate proficiency in the concepts learned and help build a solid foundation of critical financial skills. Topics span a wide range, including budgeting, writing and pitching business plans, marketing, prototyping, project planning, balance sheets, income and cash flow statements, resume writing, online advertising and social media marketing, graphic design, philanthropy, and much more.

With the guidance of the instructor as well as mentors and specialists, students will use the “design thinking” process to identify a problem of a social nature and follow all the steps necessary to develop feasible and scalable solutions. Working to solve a problem creatively and logically is meant to ignite their entrepreneurial spirit. When possible, field trips will include company tours, shareholder meetings, and visits to brokerage firms. Guest speakers such as financial planners, business leaders, accountants, artists, and actuaries will share their expertise with students.

Patrick Farrell

Instructor of Mathematics, Statistics and Computer Science, Indoor and Outdoor Track Assistant Coach, Day Student Advisor, Dorm Complement

Mr. Farrell has been sharing his love of mathematics, and more recently computer science, with young people for 40 years. He is equally passionate about issues of social justice, having served on both the Access to Success and Antiracism task forces, taught six summers in the school's Mathematics and Science for Minority Students program, and taught four summers in the ACE program.

Miss Fulford teaches all levels of mathematics. She runs Eaton Cottage, a dorm known for its surrounding garden, which members of the class of ’07 named "The Garden of Eaton."

- 17 entry points

- 33 faculty and staff

- 15 students per class

- 7 computer science electives

Related Student Clubs

- Math Team

- CS Club

- Robotics Club

- Techmasters

- VEX Robotics

- 3D VR Club